Whenever you create a flat file using a PowerCenter workflow, the name of the file is static: it is whatever you specify in the session's 'Output Filename' property. There can be business cases where you need to generate flat files with dynamic file names, for example by adding a timestamp to the file name. In this article we will discuss how to generate flat files with dynamically changing names.

As the first step, let's create the flat file target definition using the Target Designer.

Now let's add a new column, 'FileName', using the 'Add File Name Column' button highlighted at the top right corner, as shown in the image below. Don't get confused: this column will not appear in the target file. It is the column whose value determines the file name dynamically.
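To make the role of the 'FileName' column concrete, here is a minimal Python model of the behaviour described above. It is not PowerCenter code: each row carries a FileName value that selects the output file, and that value is left out of the columns actually written. The function name `write_rows_by_filename` and the sample columns `CustomerId` and `Name` are hypothetical, and the model simply assumes a new file is opened whenever a row carries a name it has not seen before.

```python
import csv
import os


def write_rows_by_filename(rows, target_dir, filename_key="FileName"):
    """Route each row to the file named by its FileName value (illustrative model).

    The FileName value chooses the output file but is not written as a column,
    mirroring how the 'FileName' column behaves in the flat file target.
    """
    handles = {}  # file name -> open file object
    writers = {}  # file name -> csv.writer bound to that file
    try:
        for row in rows:
            name = row[filename_key]
            # Every column except the file name column goes into the file.
            values = [v for k, v in row.items() if k != filename_key]
            if name not in writers:
                fh = open(os.path.join(target_dir, name), "w", newline="")
                handles[name] = fh
                writers[name] = csv.writer(fh)
            writers[name].writerow(values)
    finally:
        for fh in handles.values():
            fh.close()
    return sorted(handles)


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as target_dir:
        # Hypothetical sample rows; both point at the same dated file.
        sample = [
            {"CustomerId": 1, "Name": "Ann", "FileName": "Customer_Master_2024-03-05.csv"},
            {"CustomerId": 2, "Name": "Bob", "FileName": "Customer_Master_2024-03-05.csv"},
        ]
        print(write_rows_by_filename(sample, target_dir))
```

In the real session PowerCenter handles this when it writes the target; the sketch only illustrates why the FileName value never shows up inside the generated file.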

Next, create the mapping that generates the flat file, as shown below.

We won't worry about any transformation in the mapping except the EXP_FILE_NAME transformation. This Expression transformation is responsible for generating the target file name dynamically.

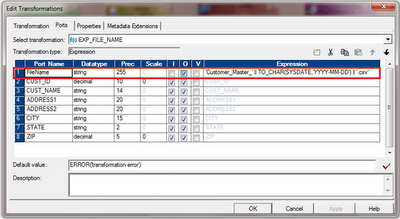

Let's look at the Expression transformation below.

In this transformation, create an output port 'FileName' and set its expression to 'Customer_Master_' || TO_CHAR(SYSDATE,'YYYY-MM-DD') || '.csv'. This expression appends the current date to the file name. You can customize the expression to produce whatever file name you need.
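To show what the expression evaluates to, here is a rough Python analogue of the string concatenation. It is an illustration only: `build_file_name` is a hypothetical helper, the `run_time` argument stands in for SYSDATE, and the strftime pattern `%Y-%m-%d` plays the role of the TO_CHAR format 'YYYY-MM-DD'. The second call assumes a full timestamp variant, roughly what a format such as 'YYYYMMDDHH24MISS' would give in the expression.

```python
from datetime import datetime


def build_file_name(run_time, prefix="Customer_Master_", date_format="%Y-%m-%d", suffix=".csv"):
    """Python analogue of 'Customer_Master_' || TO_CHAR(SYSDATE,'YYYY-MM-DD') || '.csv'.

    run_time stands in for SYSDATE; date_format is the strftime equivalent
    of the TO_CHAR format string.
    """
    return prefix + run_time.strftime(date_format) + suffix


if __name__ == "__main__":
    run = datetime(2024, 3, 5, 14, 30, 15)
    # Date only, as in the article's expression.
    print(build_file_name(run))  # Customer_Master_2024-03-05.csv
    # Full timestamp variant (assumed customization).
    print(build_file_name(run, date_format="%Y%m%d%H%M%S"))  # Customer_Master_20240305143015.csv
```

Passing the time in as an argument, rather than reading the clock inside the function, keeps the sketch deterministic and easy to test.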

Now, from the Expression transformation, connect the 'FileName' port, along with all the remaining ports, to the target definition.

We are all done. Now create and run the workflow; you will see the file generated in your Target File directory with the date as part of the file name. No specific setting is required at the session level.
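After a few runs, one way to check the result from outside PowerCenter is a short Python script that lists the dated files in the target directory. This is a minimal sketch assuming the naming pattern from the expression above; the helper name `find_dated_outputs` and the directory you pass in are hypothetical, and files that match the prefix but do not carry a parseable date are skipped.

```python
import glob
import os
import sys
from datetime import datetime


def find_dated_outputs(target_dir, prefix="Customer_Master_", suffix=".csv", date_format="%Y-%m-%d"):
    """Map each run date to the file generated for it in target_dir."""
    pattern = os.path.join(glob.escape(target_dir), glob.escape(prefix) + "*" + glob.escape(suffix))
    found = {}
    for path in glob.glob(pattern):
        name = os.path.basename(path)
        # Strip the fixed prefix and suffix, leaving only the date part.
        stamp = name[len(prefix):len(name) - len(suffix)]
        try:
            found[datetime.strptime(stamp, date_format).date()] = path
        except ValueError:
            continue  # Not one of the dated output files.
    return found


if __name__ == "__main__":
    # Hypothetical usage: pass the Target File directory, else scan the current one.
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    for run_date, path in sorted(find_dated_outputs(target).items()):
        print(run_date, path)
```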
